Window functions in Spark

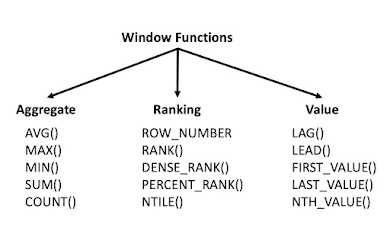

================================================-> Spark Window functions operate on a group of rows like partition and return a single value for every input row. Spark SQL supports three kinds of window functions:

a) Ranking functions

b) Analytic functions

c) Aggregate functions

Ranking Functions:

=============

-> ROW_NUMBER(): It is used to get a unique sequential number for each row in the specified data.

-> RANK(): It is used to provide a rank to the result within a window partition. This function leaves gaps in rank when there are ties.

-> DENSE_RANK(): is used to get the result with rank of rows within a window partition without any gaps. This is similar to rank() function difference being rank function leaves gaps in rank when there are ties.

-> NTILE(): It is used to distribute the number of rows in the specified (N) number of groups. Each row group gets its rank as per the specified condition. We need to specify the value for the desired number of groups.

✔️Without use of partition by :

The NTILE(2) shows that we require a group of two records in the result.

✔️With the use of Partition by:

The NTILE(2), each partition in department group is divided into two groups.

✔️ Code Snippet:

𝘷𝘢𝘭 𝘥𝘧 = 𝘚𝘦𝘲((101,"𝘔𝘰𝘩𝘢𝘯","𝘈𝘥𝘮𝘪𝘯",4000),

(102, "𝘙𝘢𝘫𝘬𝘶𝘮𝘢𝘳", "𝘏𝘙", 5000),

(103, "𝘈𝘬𝘣𝘢𝘳", "𝘐𝘛",9990),

(104, "𝘋𝘰𝘳𝘷𝘪𝘯", "𝘍𝘪𝘯𝘢𝘯𝘤𝘦", 7000),

(105, "𝘙𝘰𝘩𝘪𝘵", "𝘏𝘙", 3000),

(106, "𝘙𝘢𝘫𝘦𝘴𝘩", "𝘍𝘪𝘯𝘢𝘯𝘤𝘦",9800),

(107, "𝘗𝘳𝘦𝘦𝘵", "𝘏𝘙", 7000),

(108, "𝘔𝘢𝘳𝘺𝘢𝘮", "𝘈𝘥𝘮𝘪𝘯",8000),

(109, "𝘚𝘢𝘯𝘫𝘢𝘺", "𝘐𝘛", 7000),

(110, "𝘝𝘢𝘴𝘶𝘥𝘩𝘢", "𝘐𝘛", 7000),

(111, "𝘔𝘦𝘭𝘪𝘯𝘥𝘢", "𝘐𝘛", 8000),

(112, "𝘒𝘰𝘮𝘢𝘭", "𝘐𝘛", 10000))

𝘪𝘮𝘱𝘰𝘳𝘵 𝘴𝘱𝘢𝘳𝘬.𝘪𝘮𝘱𝘭𝘪𝘤𝘪𝘵𝘴._

𝘷𝘢𝘭 𝘥𝘧2 = 𝘥𝘧.𝘵𝘰𝘋𝘍("𝘪𝘥","𝘕𝘢𝘮𝘦","𝘋𝘦𝘱𝘢𝘳𝘵𝘮𝘦𝘯𝘵","𝘚𝘢𝘭𝘢𝘳𝘺")

𝘷𝘢𝘭 𝘸𝘪𝘯𝘥𝘰𝘸 = 𝘞𝘪𝘯𝘥𝘰𝘸.𝘱𝘢𝘳𝘵𝘪𝘵𝘪𝘰𝘯𝘉𝘺("𝘋𝘦𝘱𝘢𝘳𝘵𝘮𝘦𝘯𝘵").𝘰𝘳𝘥𝘦𝘳𝘉𝘺("𝘚𝘢𝘭𝘢𝘳𝘺")

𝘥𝘧2.𝘸𝘪𝘵𝘩𝘊𝘰𝘭𝘶𝘮𝘯("𝘳𝘰𝘸_𝘯𝘶𝘮𝘣𝘦𝘳",𝘳𝘰𝘸_𝘯𝘶𝘮𝘣𝘦𝘳.𝘰𝘷𝘦𝘳(𝘸𝘪𝘯𝘥𝘰𝘸))

.𝘸𝘪𝘵𝘩𝘊𝘰𝘭𝘶𝘮𝘯("𝘳𝘢𝘯𝘬",𝘳𝘢𝘯𝘬().𝘰𝘷𝘦𝘳(𝘸𝘪𝘯𝘥𝘰𝘸))

.𝘸𝘪𝘵𝘩𝘊𝘰𝘭𝘶𝘮𝘯("𝘥𝘦𝘯𝘴𝘦_𝘳𝘢𝘯𝘬",𝘥𝘦𝘯𝘴𝘦_𝘳𝘢𝘯𝘬().𝘰𝘷𝘦𝘳(𝘸𝘪𝘯𝘥𝘰𝘸))

.𝘸𝘪𝘵𝘩𝘊𝘰𝘭𝘶𝘮𝘯("𝘯𝘵𝘪𝘭𝘦",𝘯𝘵𝘪𝘭𝘦(2).𝘰𝘷𝘦𝘳(𝘸𝘪𝘯𝘥𝘰𝘸))

.𝘴𝘩𝘰𝘸()

.jpg)