File formats in Big data:

As a data engineer, you will frequently face use cases that require working with different file formats.

Text file:

A text file, also called a flat file, is the simplest of all file formats. I have mostly used it to store unstructured data such as log files, and I have also received raw delimited structured data in this format.

CSV File:

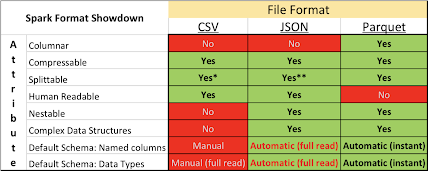

CSV files are a common way to transfer data. They are not compressed by default. Both pandas and PySpark support reading and writing CSV files. This is the format I have used most often to receive data from different source systems.
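To make the "not compressed by default" point concrete, here is a minimal sketch using only the Python standard library (`csv`, `gzip`, `io`) rather than pandas or PySpark. The column names and sample rows are made up for illustration.

```python
import csv
import gzip
import io

# Hypothetical sample rows; the column names are invented for this example.
rows = [{"id": i, "city": "Pune", "amount": 100 + i} for i in range(1000)]

# Write the rows as plain (uncompressed) CSV text.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["id", "city", "amount"])
writer.writeheader()
writer.writerows(rows)
raw_bytes = buffer.getvalue().encode("utf-8")

# Compress explicitly, because CSV itself has no built-in compression.
compressed_bytes = gzip.compress(raw_bytes)

# Read the data back: decompress first, then parse the CSV text.
text = gzip.decompress(compressed_bytes).decode("utf-8")
restored = list(csv.DictReader(io.StringIO(text)))

print(len(raw_bytes), len(compressed_bytes))  # raw size vs. gzip size
print(restored[0])  # note that every value comes back as a string
```

One thing this shows is that CSV carries no type information: the numbers written out are read back as strings, so the reader has to supply the schema.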

JSON:

JavaScript Object Notation (JSON) is a lightweight data-interchange format. It is a popular choice for sharing data of many shapes and sizes, which is one reason many APIs and web services return data as JSON. JSON files are not compressed by default. Both pandas and PySpark support reading and writing JSON. I have used it to store the metadata or schema structure of my datasets, and I have also received API responses in JSON format.
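As a small sketch of the schema-storage use case, the snippet below round-trips a dataset description through JSON with the standard `json` module. The dataset name, column names, and types are hypothetical and only serve the example.

```python
import json

# Hypothetical schema description for a dataset; all names are illustrative.
schema = {
    "dataset": "orders",
    "columns": [
        {"name": "order_id", "type": "long", "nullable": False},
        {"name": "amount", "type": "double", "nullable": True},
    ],
}

# Serialize to a JSON string (json.dump would write to a file object instead).
payload = json.dumps(schema, indent=2)

# Parse it back, much as you would parse the body of an API response.
loaded = json.loads(payload)
column_names = [col["name"] for col in loaded["columns"]]

print(payload)
print(column_names)
```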

Apache Parquet:

Parquet is a big data file format that stores data in a machine-readable binary format, unlike CSV and JSON, which are human-readable. It is a columnar format, meaning data is written column by column, and it is optimized for fast retrieval. This makes it ideal for read-heavy analytical workloads. Parquet also stores metadata at the end of each file, including schema information, per-column min and max values, and the compression and encoding schemes, which makes the files self-describing. This metadata enables data skipping and allows the data to be split across multiple machines for large-scale parallel processing. Parquet supports efficient compression and encoding schemes that can lower data storage costs. Most of my datasets are saved in this format. Both pandas and PySpark support reading and writing Parquet files.
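The idea behind columnar storage and min/max data skipping can be sketched in plain Python. This is a toy model only: the chunking scheme, function names, and sample data are assumptions for illustration, and real Parquet files use row groups, column chunks, pages, and binary encodings that are not shown here.

```python
# Hypothetical sample data; the column names are invented for this example.
rows = [
    {"id": 1, "city": "Pune", "amount": 120},
    {"id": 2, "city": "Delhi", "amount": 80},
    {"id": 3, "city": "Pune", "amount": 300},
    {"id": 4, "city": "Mumbai", "amount": 450},
    {"id": 5, "city": "Delhi", "amount": 60},
    {"id": 6, "city": "Pune", "amount": 90},
]

def to_chunks(rows, chunk_size):
    """Split rows into chunks and store each chunk column by column,
    together with per-column (min, max) statistics."""
    chunks = []
    for start in range(0, len(rows), chunk_size):
        part = rows[start:start + chunk_size]
        columns = {name: [r[name] for r in part] for name in part[0]}
        stats = {name: (min(vals), max(vals)) for name, vals in columns.items()}
        chunks.append({"columns": columns, "stats": stats})
    return chunks

def amounts_above(chunks, threshold):
    """Read only the 'amount' column, skipping chunks whose max rules them out."""
    result, scanned = [], 0
    for chunk in chunks:
        _, high = chunk["stats"]["amount"]
        if high <= threshold:
            continue  # data skipping: no value in this chunk can match
        scanned += 1
        result.extend(v for v in chunk["columns"]["amount"] if v > threshold)
    return result, scanned

chunks = to_chunks(rows, chunk_size=2)
matches, scanned = amounts_above(chunks, threshold=200)
print(matches, scanned)  # only one of the three chunks is actually scanned
```

The query touches a single column and consults the statistics before reading any values, which is the same reason analytical reads over Parquet can avoid most of the file.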

Apache Avro:

Avro is another big data file format that stores data in binary form. It is a row-based format: data is stored row by row, which suits write-heavy workloads. Avro is worth considering when I/O patterns are more write-heavy, or when queries tend to retrieve multiple records in their entirety. For example, Avro works well with a message bus such as Event Hub or Kafka, which writes multiple events/messages in succession. I have not used this format yet.
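To illustrate why a row-based layout suits append-heavy workloads, here is a toy sketch using the standard `struct` module. The fixed record layout and the function names are made up for this example; this is not Avro's actual binary encoding, which is schema-driven and variable-length.

```python
import io
import struct

# Made-up fixed layout for one event: 8-byte integer id plus 8-byte float value.
RECORD = struct.Struct("<qd")

def append_event(stream, event_id, value):
    """Write one whole record at the end of the stream."""
    stream.seek(0, io.SEEK_END)
    stream.write(RECORD.pack(event_id, value))

def read_event(stream, index):
    """Fetch one complete record with a single contiguous read."""
    stream.seek(index * RECORD.size)
    return RECORD.unpack(stream.read(RECORD.size))

# Simulate a stream of events arriving one after another.
log = io.BytesIO()
for i, value in enumerate([0.5, 1.5, 2.5]):
    append_event(log, i, value)

print(read_event(log, 1))  # all fields of the second event, read together
```

Each incoming event is written as one contiguous unit, and reading a full record back needs one seek and one read. A columnar layout would instead have to touch every column to assemble the same record.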

Protocol Buffers:

Protocol Buffers are Google's language-neutral, platform-neutral, extensible mechanism for serializing structured data: think XML, but smaller, faster, and simpler. They are often used as the payload format for API responses.

Other file formats include Optimized Row Columnar (ORC), PDF, XML, and XLSX.

